=================

UPDATE 10/17/2016

=================

I enabled the ksm and ksmtuned services on the CentOS 7.2 VIRTHOST two days ago, together with an HA overcloud deployment done via instack-virt-setup.

The stack user's .bashrc on the VIRTHOST:

[stack@Server72Centos ~]$ cat .bashrc

# .bashrc

# Source global definitions

if [ -f /etc/bashrc ]; then

. /etc/bashrc

fi

# Uncomment the following line if you don't like systemctl's auto-paging feature:

# export SYSTEMD_PAGER=

export NODE_DIST=centos7

export NODE_CPU=2

export NODE_MEM=6500

export NODE_COUNT=4

export UNDERCLOUD_NODE_CPU=2

export UNDERCLOUD_NODE_MEM=8000

export NODE_DISK=45

export UNDERCLOUD_NODE_DISK=35

export FS_TYPE=ext4

# User specific aliases and functions

export LIBVIRT_DEFAULT_URI="qemu:///system"

So far I don't see any drawback from having the ksmd daemon up and running on

the VIRTHOST (the HA overcloud was built via instack-virt-setup). I should also

note that the performance problems caused by swap utilization of around

3.5 GB are gone. The overcloud KVM nodes demonstrate performance close

to TripleO QuickStart (for the time being unavailable for RDO Newton -> trunk/newton).
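
For reference, here is a minimal sketch of how the KSM state can be checked on the VIRTHOST. The systemctl lines in the comments assume the ksm and ksmtuned units that ship with the qemu-kvm packages on CentOS 7. The helper name ksm_state and the KSM_DIR override are illustrative only and are not part of any package.

```shell
#!/bin/bash
# Enabling the services (assumed unit names: ksm, ksmtuned):
#   sudo systemctl enable ksm ksmtuned
#   sudo systemctl start ksm ksmtuned

# Hypothetical helper: translate /sys/kernel/mm/ksm/run into a word.
# KSM_DIR may be overridden to point at a test directory.
ksm_state() {
    local dir="${KSM_DIR:-/sys/kernel/mm/ksm}"
    case "$(cat "$dir/run" 2>/dev/null)" in
        0) echo "stopped" ;;      # ksmd is not scanning
        1) echo "running" ;;      # ksmd is scanning and merging
        2) echo "unmerging" ;;    # ksmd stopped, merged pages are split again
        *) echo "unavailable" ;;  # file missing or unreadable
    esac
}
```

Calling ksm_state on the VIRTHOST should report "running" once ksmtuned has decided that memory pressure justifies scanning.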

=============

END UPDATE

=============

The post below demonstrates that running KSM under QuickStart

provides significant relief on a 32 GB VIRTHOST compared with nearly the same deployment

described in the previous draft http://lxer.com/module/newswire/view/234740/index.html

Due to the patch "[PATCH] Add swap to the undercloud when using an overcloud image", the setups for QuickStart and for instack-virt-setup appear to allocate the same initial memory for the undercloud and for the HA controllers in the 3-node PCS cluster.

Per https://en.wikipedia.org/wiki/Kernel_same-page_merging

Kernel same-page merging

In computing, kernel same-page merging (abbreviated as KSM, and also known

as kernel shared memory and memory merging) is a kernel feature that makes

it possible for a hypervisor system to share identical memory pages amongst

different processes or virtualized guests. While not directly linked, Kernel-based

Virtual Machine (KVM) can use KSM to merge memory pages occupied by virtual machines.

KSM performs the memory sharing by scanning through the main memory and

finding duplicate pages. Each detected duplicate pair is then merged into a single page, and mapped into both original locations. The page is also marked as "copy-on-write" (COW), so the kernel will automatically separate them again should one process modify its data.
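
The effect of the merging described above can be estimated from the counters the kernel exposes under /sys/kernel/mm/ksm. The sketch below assumes that pages_sharing approximates the number of pages saved; the function name ksm_saved_mib is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: convert a pages_sharing count into MiB saved.
#   $1 = value of /sys/kernel/mm/ksm/pages_sharing
#   $2 = page size in bytes (defaults to 4096)
ksm_saved_mib() {
    local pages_sharing=$1
    local page_size=${2:-4096}
    echo $(( pages_sharing * page_size / 1024 / 1024 ))
}
```

On a live host it could be called as `ksm_saved_mib "$(cat /sys/kernel/mm/ksm/pages_sharing)" "$(getconf PAGESIZE)"`.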

For the time being, the deployment procedure for TripleO QuickStart is a bit more complicated than it was for the Mitaka stable release. The instructions below give a step-by-step guide to actions on the undercloud VM, performed after you have logged into it, that a QuickStart environment does not usually require.

Clone the repo below:

[jon@fedora24wks release]$ git clone https://github.com/openstack/tripleo-quickstart

[jon@fedora24wks release]$ cd tripleo* ; cd ./config/release

**********************************************

Now verify that newton.yml is here.

**********************************************

[jon@fedora24wks release]$ cat newton.yml

release: newton

undercloud_image_url: http://buildlogs.centos.org/centos/7/cloud/x86_64/tripleo_images/newton/delorean/undercloud.qcow2

overcloud_image_url: http://buildlogs.centos.org/centos/7/cloud/x86_64/tripleo_images/newton/delorean/overcloud-full.tar

ipa_image_url: http://buildlogs.centos.org/centos/7/cloud/x86_64/tripleo_images/newton/delorean/ironic-python-agent.tar

**************************************************************************************

Update ./config/general_config/ha.yml: raise the memory allocation for each HA controller

to 6.5 GB (the minimum needed to avoid a crash in step 5 of the overcloud deployment)

**************************************************************************************

[john@fedora24wks tripleo-quickstart]$ cat ./config/general_config/ha.yml

# Deploy an HA openstack environment.
#
# This will require (6144 * 4) == approx. 24GB for the overcloud
# nodes, plus another 8GB for the undercloud, for a total of around
# 32GB.
control_memory: 6500
compute_memory: 6144

undercloud_memory: 8192

# Giving the undercloud additional CPUs can greatly improve heat's
# performance (and result in a shorter deploy time).
undercloud_vcpu: 4

# Create three controller nodes and one compute node.
overcloud_nodes:
  - name: control_0
    flavor: control
  - name: control_1
    flavor: control
  - name: control_2
    flavor: control

  - name: compute_0
    flavor: compute

# We don't need introspection in a virtual environment (because we are
# creating all the "hardware" we really know the necessary
# information).
step_introspect: false

# Tell tripleo about our environment.
network_isolation: true
extra_args: >-
  --control-scale 3 --compute-scale 1 --neutron-network-type vxlan
  --neutron-tunnel-types vxlan
  --ntp-server pool.ntp.org
test_ping: true
enable_pacemaker: true

tempest_config: false
run_tempest: false
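
With the values above, the guests are allotted 3 x 6500 + 6144 + 8192 = 33836 MB, slightly more than the 32 GB the VIRTHOST has, which is why page merging (or swap) matters here. A small sketch of that arithmetic follows; the function name total_guest_mem_mb is illustrative only.

```shell
#!/bin/bash
# Hypothetical helper: sum the memory handed to all guest VMs, in MB.
#   $1 = control_memory     $2 = number of controllers
#   $3 = compute_memory     $4 = number of compute nodes
#   $5 = undercloud_memory
total_guest_mem_mb() {
    local control_mb=$1 control_count=$2
    local compute_mb=$3 compute_count=$4
    local undercloud_mb=$5
    echo $(( control_mb * control_count + compute_mb * compute_count + undercloud_mb ))
}

# Values taken from the ha.yml shown above.
total_guest_mem_mb 6500 3 6144 1 8192   # prints 33836
```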

****************************************************************************

Run quickstart.sh to create undercloud VM on VIRTHOST

****************************************************************************

[john@fedora24wks tripleo-quickstart]$ bash quickstart.sh -R newton --config ./config/general_config/ha.yml $VIRTHOST
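
The command above expects $VIRTHOST to be set in the workstation's shell already. A minimal guard, assuming nothing beyond that variable name, might look like the sketch below; the helper require_virthost and the host name in the usage note are hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: print $VIRTHOST, or fail if it is empty or unset.
require_virthost() {
    if [ -z "${VIRTHOST:-}" ]; then
        echo "VIRTHOST is not set" >&2
        return 1
    fi
    echo "$VIRTHOST"
}
```

For example: `export VIRTHOST=virthost.example.com`, then `require_virthost && bash quickstart.sh -R newton --config ./config/general_config/ha.yml "$VIRTHOST"`.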

Log in to the undercloud when done

[john@fedora24wks tripleo-quickstart]$ ssh -F /home/john/.quickstart/ssh.config.ansible undercloud

********************************************************************************************

For the time being, QuickStart requires a manual overcloud deployment.

Now you are logged into the undercloud VM running on the VIRTHOST as stack.

Building the overcloud images is skipped because QuickStart CI already supplies them. There is no harm in attempting to build them; it takes a second, since they are already there.

********************************************************************************************

Create external interface vlan10

*************************************

[stack@undercloud ~]$ sudo vi /etc/sysconfig/network-scripts/ifcfg-vlan10

DEVICE=vlan10

ONBOOT=yes

DEVICETYPE=ovs

TYPE=OVSIntPort

BOOTPROTO=static

IPADDR=10.0.0.1

NETMASK=255.255.255.0

OVS_BRIDGE=br-ctlplane

OVS_OPTIONS="tag=10"

[stack@undercloud ~]$ sudo ifup vlan10

[stack@undercloud ~]$ sudo ovs-vsctl show

0d9f9351-93cd-4c83-8eb4-82e8b1ca6665

Manager "ptcp:6640:127.0.0.1"

is_connected: true

Bridge br-ctlplane

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port br-ctlplane

Interface br-ctlplane

type: internal

Port phy-br-ctlplane

Interface phy-br-ctlplane

type: patch

options: {peer=int-br-ctlplane}

Port "eth1"

Interface "eth1"

Port "vlan10"

tag: 10

Interface "vlan10"

type: internal

Bridge br-int

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port int-br-ctlplane

Interface int-br-ctlplane

type: patch

options: {peer=phy-br-ctlplane}

Port br-int

Interface br-int

type: internal

Port "tapb0b80495-42"

tag: 1

Interface "tapb0b80495-42"

type: internal

ovs_version: "2.5.0"

*********************************************

Create manually network_env.yaml

*********************************************

[stack@instack ~]$vi network_env.yaml

{

"parameter_defaults": {

"ControlPlaneDefaultRoute": "192.0.2.1",

"ControlPlaneSubnetCidr": "24",

"DnsServers": [

"192.168.122.1"

],

"EC2MetadataIp": "192.0.2.1",

"ExternalAllocationPools": [

{

"end": "10.0.0.250",

"start": "10.0.0.4"

}

],

"ExternalNetCidr": "10.0.0.1/24",

"NeutronExternalNetworkBridge": ""

}

}

$ sudo iptables -A BOOTSTACK_MASQ -s 10.0.0.0/24 ! -d 10.0.0.0/24 -j MASQUERADE -t nat

was done in RDO Newton Overcloud HA deployment via instack-virt-setup on CentOS 7.2 VIRTHOST

Compare numbers under SHR header in reports provided down here.

Cloud F24 VM is running on overcloud-novacompute-0

Quite the same configuration been done by instack-virt-setup

Swap area utilization at least 2.5 GB ( up to 3.5 GB ) during cloud VM F24 runtime.

***************************************************************************************

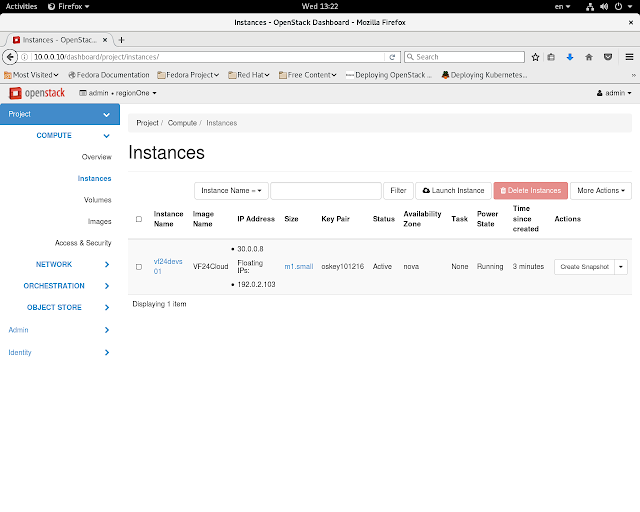

System information provided via dashboard ( remote sshuttle connection )

***************************************************************************************

# Upload pre-built overcloud images

[stack@undercloud ~]$ source stackrc

[stack@undercloud ~]$ openstack overcloud image upload

[stack@undercloud ~]$ openstack baremetal import instackenv.json

[stack@undercloud ~]$ openstack baremetal configure boot

[stack@undercloud ~]$ openstack baremetal introspection bulk start

[stack@undercloud ~]$ ironic node-list

[stack@undercloud ~]$ neutron subnet-list

[stack@undercloud ~]$ neutron subnet-update 1b7d82e5-0bf1-4ba5-8008-4aa402598065 \
--dns-nameserver 192.168.122.1
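
The subnet ID above is copied by hand from the `neutron subnet-list` table. As a sketch, it could be extracted instead, assuming the usual ASCII table layout with the ID in the first column and a single ctlplane subnet on the undercloud; the helper name first_subnet_id is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: read a `neutron subnet-list` table on stdin and
# print the ID found in the first data row.
# Rows 1-3 are the top border, the header and the header border.
first_subnet_id() {
    awk -F'|' 'NR > 3 && NF > 2 { gsub(/ /, "", $2); print $2; exit }'
}
```

Usage on the undercloud: `neutron subnet-update "$(neutron subnet-list | first_subnet_id)" --dns-nameserver 192.168.122.1`.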

**************************************

Create external interface vlan10

*************************************

[stack@undercloud ~]$ sudo vi /etc/sysconfig/network-scripts/ifcfg-vlan10

DEVICE=vlan10

ONBOOT=yes

DEVICETYPE=ovs

TYPE=OVSIntPort

BOOTPROTO=static

IPADDR=10.0.0.1

NETMASK=255.255.255.0

OVS_BRIDGE=br-ctlplane

OVS_OPTIONS="tag=10"

[stack@undercloud ~]$ sudo ifup vlan10

[stack@undercloud ~]$ sudo ovs-vsctl show

0d9f9351-93cd-4c83-8eb4-82e8b1ca6665

Manager "ptcp:6640:127.0.0.1"

is_connected: true

Bridge br-ctlplane

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port br-ctlplane

Interface br-ctlplane

type: internal

Port phy-br-ctlplane

Interface phy-br-ctlplane

type: patch

options: {peer=int-br-ctlplane}

Port "eth1"

Interface "eth1"

Port "vlan10"

tag: 10

Interface "vlan10"

type: internal

Bridge br-int

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port int-br-ctlplane

Interface int-br-ctlplane

type: patch

options: {peer=phy-br-ctlplane}

Port br-int

Interface br-int

type: internal

Port "tapb0b80495-42"

tag: 1

Interface "tapb0b80495-42"

type: internal

ovs_version: "2.5.0"
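
In the listing above, the point to verify is that port vlan10 sits on br-ctlplane with tag 10. A small sketch that pulls the tag of a named port out of `ovs-vsctl show` output follows; port_tag is an illustrative helper, not an Open vSwitch command.

```shell
#!/bin/bash
# Hypothetical helper: read `ovs-vsctl show` output on stdin and print the
# VLAN tag of the port named in $1 (prints nothing if the port is untagged).
port_tag() {
    awk -v want="$1" '
        $1 == "Port" { gsub(/"/, "", $2); current = $2 }
        $1 == "tag:" && current == want { print $2; exit }
    '
}
```

Usage: `sudo ovs-vsctl show | port_tag vlan10` should print 10 for the configuration shown here.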

*********************************************

Manually create network_env.yaml

*********************************************

[stack@instack ~]$vi network_env.yaml

{

"parameter_defaults": {

"ControlPlaneDefaultRoute": "192.0.2.1",

"ControlPlaneSubnetCidr": "24",

"DnsServers": [

"192.168.122.1"

],

"EC2MetadataIp": "192.0.2.1",

"ExternalAllocationPools": [

{

"end": "10.0.0.250",

"start": "10.0.0.4"

}

],

"ExternalNetCidr": "10.0.0.1/24",

"NeutronExternalNetworkBridge": ""

}

}
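
The allocation pool above starts at 10.0.0.4, so 10.0.0.1, which was assigned to the vlan10 interface on the undercloud, stays outside it. As a quick sanity check of how many external addresses the pool offers, here is a sketch that assumes both ends lie in the same /24; pool_size is a hypothetical helper.

```shell
#!/bin/bash
# Hypothetical helper: count the addresses between two IPv4 addresses that
# share their first three octets (inclusive of both ends).
pool_size() {
    local start_octet=${1##*.}
    local end_octet=${2##*.}
    echo $(( end_octet - start_octet + 1 ))
}

pool_size 10.0.0.4 10.0.0.250   # prints 247
```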

$ sudo iptables -A BOOTSTACK_MASQ -s 10.0.0.0/24 ! -d 10.0.0.0/24 -j MASQUERADE -t nat

[stack@undercloud ~]$ sudo touch -f \

/usr/share/openstack-tripleo-heat-templates/puppet/post.yaml

[stack@undercloud ~]$ cat overcloud-deploy.sh

#!/bin/bash -x

source /home/stack/stackrc

openstack overcloud deploy \

--control-scale 3 --compute-scale 1 \

--libvirt-type qemu \

--ntp-server pool.ntp.org \

--templates /usr/share/openstack-tripleo-heat-templates \

-e /usr/share/openstack-tripleo-heat-templates/environments/puppet-pacemaker.yaml \

-e /usr/share/openstack-tripleo-heat-templates/environments/network-isolation.yaml \

-e /usr/share/openstack-tripleo-heat-templates/environments/net-single-nic-with-vlans.yaml \

-e $HOME/network_env.yaml

[stack@undercloud ~]$ ./overcloud-deploy.sh
+ source /home/stack/stackrc
++ export NOVA_VERSION=1.1
++ NOVA_VERSION=1.1
+++ sudo hiera admin_password
++ export OS_PASSWORD=a8cf847f82ff64b158afde70183b268fecd9f492
++ OS_PASSWORD=a8cf847f82ff64b158afde70183b268fecd9f492
++ export OS_AUTH_URL=http://192.0.2.1:5000/v2.0
++ OS_AUTH_URL=http://192.0.2.1:5000/v2.0
++ export OS_USERNAME=admin
++ OS_USERNAME=admin
++ export OS_TENANT_NAME=admin
++ OS_TENANT_NAME=admin
++ export COMPUTE_API_VERSION=1.1
++ COMPUTE_API_VERSION=1.1
++ export OS_BAREMETAL_API_VERSION=1.15
++ OS_BAREMETAL_API_VERSION=1.15
++ export OS_NO_CACHE=True
++ OS_NO_CACHE=True
++ export OS_CLOUDNAME=undercloud
++ OS_CLOUDNAME=undercloud
++ export OS_IMAGE_API_VERSION=1
++ OS_IMAGE_API_VERSION=1
+ openstack overcloud deploy --control-scale 3 --compute-scale 1 --libvirt-type qemu --ntp-server pool.ntp.org --templates /usr/share/openstack-tripleo-heat-templates -e /usr/share/openstack-tripleo-heat-templates/environments/puppet-pacemaker.yaml -e /usr/share/openstack-tripleo-heat-templates/environments/network-isolation.yaml -e /usr/share/openstack-tripleo-heat-templates/environments/net-single-nic-with-vlans.yaml -e /home/stack/network_env.yaml
WARNING: openstackclient.common.utils is deprecated and will be removed after Jun 2017. Please use osc_lib.utils
Removing the current plan files
Uploading new plan files
Started Mistral Workflow. Execution ID: 5511b4a9-4d0c-4937-9450-e2d9e7e36ab3
Plan updated
Deploying templates in the directory /tmp/tripleoclient-LwH7ZR/tripleo-heat-templates
Started Mistral Workflow. Execution ID: 5e331cfa-4b4e-49dd-bc4c-89b50aa42740
2016-10-12 07:30:50Z [overcloud]: CREATE_IN_PROGRESS Stack CREATE started
2016-10-12 07:30:51Z [overcloud.HorizonSecret]: CREATE_IN_PROGRESS state changed
2016-10-12 07:30:52Z [overcloud.PcsdPassword]: CREATE_IN_PROGRESS state changed
2016-10-12 07:30:52Z [overcloud.MysqlRootPassword]: CREATE_IN_PROGRESS state changed
2016-10-12 07:30:52Z [overcloud.ServiceNetMap]: CREATE_IN_PROGRESS state changed
2016-10-12 07:30:53Z [overcloud.HeatAuthEncryptionKey]: CREATE_IN_PROGRESS state changed
2016-10-12 07:30:53Z [overcloud.RabbitCookie]: CREATE_IN_PROGRESS state changed
2016-10-12 07:30:54Z [overcloud.Networks]: CREATE_IN_PROGRESS state changed
2016-10-12 07:30:54Z [overcloud.PcsdPassword]: CREATE_COMPLETE state changed
2016-10-12 07:30:55Z [overcloud.ServiceNetMap]: CREATE_COMPLETE state changed
2016-10-12 07:30:55Z [overcloud.HeatAuthEncryptionKey]: CREATE_COMPLETE state changed
2016-10-12 07:30:55Z [overcloud.Networks]: CREATE_IN_PROGRESS Stack CREATE started
2016-10-12 07:30:55Z [overcloud.MysqlRootPassword]: CREATE_COMPLETE state changed
2016-10-12 07:30:55Z [overcloud.RabbitCookie]: CREATE_COMPLETE state changed
2016-10-12 07:30:55Z [overcloud.Networks.StorageMgmtNetwork]: CREATE_IN_PROGRESS state changed
2016-10-12 07:30:56Z [overcloud.HorizonSecret]: CREATE_COMPLETE state changed
2016-10-12 07:30:56Z [overcloud.DefaultPasswords]: CREATE_IN_PROGRESS state changed
2016-10-12 07:30:56Z [overcloud.Networks.TenantNetwork]: CREATE_IN_PROGRESS state changed
. . . . . .
2016-10-12 08:18:50Z [overcloud.AllNodesDeploySteps.ControllerDeployment_Step5]: CREATE_COMPLETE Stack CREATE completed successfully
2016-10-12 08:18:51Z [overcloud.AllNodesDeploySteps.ControllerDeployment_Step5]: CREATE_COMPLETE state changed
2016-10-12 08:18:51Z [overcloud.AllNodesDeploySteps.ComputePostConfig]: CREATE_IN_PROGRESS state changed
2016-10-12 08:18:51Z [overcloud.AllNodesDeploySteps.ControllerPostConfig]: CREATE_IN_PROGRESS state changed
2016-10-12 08:18:51Z [overcloud.AllNodesDeploySteps.BlockStoragePostConfig]: CREATE_IN_PROGRESS state changed
2016-10-12 08:18:51Z [overcloud.AllNodesDeploySteps.ObjectStoragePostConfig]: CREATE_IN_PROGRESS state changed
2016-10-12 08:18:51Z [overcloud.AllNodesDeploySteps.CephStoragePostConfig]: CREATE_IN_PROGRESS state changed
2016-10-12 08:18:53Z [overcloud.AllNodesDeploySteps.ControllerPostConfig]: CREATE_COMPLETE state changed
2016-10-12 08:18:53Z [overcloud.AllNodesDeploySteps.ObjectStoragePostConfig]: CREATE_COMPLETE state changed
2016-10-12 08:18:53Z [overcloud.ControllerAllNodesDeployment.0]: SIGNAL_COMPLETE Unknown
2016-10-12 08:18:54Z [overcloud.AllNodesDeploySteps.ComputePostConfig]: CREATE_COMPLETE state changed
2016-10-12 08:18:54Z [overcloud.AllNodesDeploySteps.BlockStoragePostConfig]: CREATE_COMPLETE state changed
2016-10-12 08:18:54Z [overcloud.AllNodesDeploySteps.CephStoragePostConfig]: CREATE_COMPLETE state changed
2016-10-12 08:18:54Z [overcloud.AllNodesDeploySteps.BlockStorageExtraConfigPost]: CREATE_IN_PROGRESS state changed
2016-10-12 08:18:55Z [overcloud.AllNodesDeploySteps.ComputeExtraConfigPost]: CREATE_IN_PROGRESS state changed
2016-10-12 08:18:55Z [overcloud.AllNodesDeploySteps.CephStorageExtraConfigPost]: CREATE_IN_PROGRESS state changed
2016-10-12 08:18:56Z [overcloud.AllNodesDeploySteps.ControllerExtraConfigPost]: CREATE_IN_PROGRESS state changed
2016-10-12 08:18:56Z [overcloud.Controller.0.ControllerDeployment]: SIGNAL_COMPLETE Unknown
2016-10-12 08:18:56Z [overcloud.AllNodesDeploySteps.ObjectStorageExtraConfigPost]: CREATE_IN_PROGRESS state changed
2016-10-12 08:18:58Z [overcloud.AllNodesDeploySteps.ComputeExtraConfigPost]: CREATE_COMPLETE state changed
2016-10-12 08:18:58Z [overcloud.AllNodesDeploySteps.BlockStorageExtraConfigPost]: CREATE_COMPLETE state changed
2016-10-12 08:18:59Z [overcloud.AllNodesDeploySteps.CephStorageExtraConfigPost]: CREATE_COMPLETE state changed
2016-10-12 08:18:59Z [overcloud.Controller.0.NetworkDeployment]: SIGNAL_COMPLETE Unknown
2016-10-12 08:18:59Z [overcloud.AllNodesDeploySteps.ControllerExtraConfigPost]: CREATE_COMPLETE state changed
2016-10-12 08:18:59Z [overcloud.AllNodesDeploySteps.ObjectStorageExtraConfigPost]: CREATE_COMPLETE state changed
2016-10-12 08:19:00Z [overcloud.AllNodesDeploySteps]: CREATE_COMPLETE Stack CREATE completed successfully
2016-10-12 08:19:01Z [overcloud.AllNodesDeploySteps]: CREATE_COMPLETE state changed
2016-10-12 08:19:01Z [overcloud]: CREATE_COMPLETE Stack CREATE completed successfully
Stack overcloud CREATE_COMPLETE
Overcloud Endpoint: http://10.0.0.10:5000/v2.0
Overcloud Deployed

[stack@undercloud ~]$ . stackrc

[stack@undercloud ~]$ nova list
+--------------------------------------+-------------------------+--------+------------+-------------+---------------------+
| ID                                   | Name                    | Status | Task State | Power State | Networks            |
+--------------------------------------+-------------------------+--------+------------+-------------+---------------------+
| e6951ba8-a467-4c54-a853-b1fa5f1f3d20 | overcloud-controller-0  | ACTIVE | -          | Running     | ctlplane=192.0.2.6  |
| 1a4c436f-0aab-4fb3-bb86-34fbf38bec4a | overcloud-controller-1  | ACTIVE | -          | Running     | ctlplane=192.0.2.12 |
| 5bc7e75d-2a99-4e73-b440-a37b6164c0b6 | overcloud-controller-2  | ACTIVE | -          | Running     | ctlplane=192.0.2.14 |
| 6e379541-37de-4f3b-8667-fbe5284de10b | overcloud-novacompute-0 | ACTIVE | -          | Running     | ctlplane=192.0.2.11 |
+--------------------------------------+-------------------------+--------+------------+-------------+---------------------+

[stack@undercloud ~]$ cat overcloudrc
export OS_NO_CACHE=True
export OS_CLOUDNAME=overcloud
export OS_AUTH_URL=http://10.0.0.10:5000/v2.0
export NOVA_VERSION=1.1
export COMPUTE_API_VERSION=1.1
export OS_USERNAME=admin
export no_proxy=,10.0.0.10,192.0.2.10
export OS_PASSWORD=8WvqWXUv4z3a4gdk2EdRzYuZU
export PYTHONWARNINGS="ignore:Certificate has no, ignore:A true SSLContext object is not available"
export OS_TENANT_NAME=admin
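
The Heat event timestamps in the deployment log above (first event 07:30:50Z, last event 08:19:01Z) give the duration of the stack creation, about 48 minutes. A sketch of that calculation follows; it assumes GNU date, and elapsed_seconds is a hypothetical helper name.

```shell
#!/bin/bash
# Hypothetical helper: seconds between two Heat-style UTC timestamps such as
# "2016-10-12 07:30:50Z". Requires GNU date (the -d option).
elapsed_seconds() {
    local start end
    # Replace the trailing "Z" with an explicit "UTC" before parsing.
    start=$(date -u -d "${1%Z} UTC" +%s) || return 1
    end=$(date -u -d "${2%Z} UTC" +%s) || return 1
    echo $(( end - start ))
}

elapsed_seconds "2016-10-12 07:30:50Z" "2016-10-12 08:19:01Z"   # prints 2891
```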

[stack@undercloud ~]$ ssh heat-admin@192.0.2.6

The authenticity of host '192.0.2.6 (192.0.2.6)' can't be established.

ECDSA key fingerprint is d1:71:51:eb:72:d2:50:fb:c6:30:13:49:0d:4b:c8:b1.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.0.2.6' (ECDSA) to the list of known hosts.

[heat-admin@overcloud-controller-0 ~]$ sudo su -

[root@overcloud-controller-0 ~]# vi overcloudrc

[root@overcloud-controller-0 ~]# . overcloudrc

[root@overcloud-controller-0 ~]# pcs status

Cluster name: tripleo_cluster

Last updated: Wed Oct 12 08:21:33 2016 Last change: Wed Oct 12 08:09:48 2016 by root via cibadmin on overcloud-controller-0

Stack: corosync

Current DC: overcloud-controller-2 (version 1.1.13-10.el7_2.4-44eb2dd) - partition with quorum

3 nodes and 19 resources configured

Online: [ overcloud-controller-0 overcloud-controller-1 overcloud-controller-2 ]

Full list of resources:

ip-10.0.0.10 (ocf::heartbeat:IPaddr2): Started overcloud-controller-0

ip-192.0.2.10 (ocf::heartbeat:IPaddr2): Started overcloud-controller-1

ip-172.16.2.5 (ocf::heartbeat:IPaddr2): Started overcloud-controller-2

Clone Set: haproxy-clone [haproxy]

Started: [ overcloud-controller-0 overcloud-controller-1 overcloud-controller-2 ]

Master/Slave Set: galera-master [galera]

Masters: [ overcloud-controller-0 overcloud-controller-1 overcloud-controller-2 ]

ip-172.16.1.11 (ocf::heartbeat:IPaddr2): Started overcloud-controller-0

ip-172.16.2.13 (ocf::heartbeat:IPaddr2): Started overcloud-controller-1

Clone Set: rabbitmq-clone [rabbitmq]

Started: [ overcloud-controller-0 overcloud-controller-1 overcloud-controller-2 ]

ip-172.16.3.10 (ocf::heartbeat:IPaddr2): Started overcloud-controller-2

Master/Slave Set: redis-master [redis]

Masters: [ overcloud-controller-0 ]

Slaves: [ overcloud-controller-1 overcloud-controller-2 ]

openstack-cinder-volume (systemd:openstack-cinder-volume): Started overcloud-controller-0

PCSD Status:

overcloud-controller-0: Online

overcloud-controller-1: Online

overcloud-controller-2: Online

Daemon Status:

corosync: active/enabled

pacemaker: active/enabled

pcsd: active/enabled

[root@overcloud-controller-0 ~]# clustercheck

HTTP/1.1 200 OK

Content-Type: text/plain

Connection: close

Content-Length: 32

Galera cluster node is synced.
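
The `pcs status` output above lists all three controllers as online. As a sketch, that check can be reduced to a single number by parsing the "Online:" line; pcs_online_count is an illustrative helper, not a pcs subcommand.

```shell
#!/bin/bash
# Hypothetical helper: read `pcs status` output on stdin and print how many
# nodes appear in the "Online: [ ... ]" line.
# Fields on that line: "Online:", "[", one per node, "]".
pcs_online_count() {
    awk '$1 == "Online:" { print NF - 3; exit }'
}
```

Usage on a controller: `pcs status | pcs_online_count` should print 3 for the cluster shown here.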

The final "top" snapshot on the VIRTHOST (for QuickStart) was taken after the same deployment as

the RDO Newton overcloud HA deployment done via instack-virt-setup on the CentOS 7.2 VIRTHOST.

Compare the numbers under the SHR header in the reports provided below.

The F24 cloud VM is running on overcloud-novacompute-0.

Nearly the same configuration was built by instack-virt-setup.

Swap utilization is at least 2.5 GB (up to 3.5 GB) while the F24 cloud VM is running.
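
Besides reading the swap line in "top", the swap figure can be taken from /proc/meminfo. A minimal sketch follows, assuming the standard SwapTotal and SwapFree fields reported in kB; swap_used_mb is a hypothetical helper name.

```shell
#!/bin/bash
# Hypothetical helper: print used swap in MB, computed as
# SwapTotal - SwapFree. $1 optionally names an alternative meminfo file.
swap_used_mb() {
    local meminfo="${1:-/proc/meminfo}"
    awk '/^SwapTotal:/ { total = $2 }
         /^SwapFree:/  { free = $2 }
         END { print int((total - free) / 1024) }' "$meminfo"
}
```

Running swap_used_mb on the VIRTHOST before and after enabling ksmd gives a direct comparison with the 2.5 to 3.5 GB figures quoted here.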

***************************************************************************************

System information provided via dashboard ( remote sshuttle connection )

***************************************************************************************
