The stock tripleo-heat-templates template shown below was modified to avoid the "OSDs fewer than Replicas" cluster status: with only two Ceph OSD nodes, the default pool size of 3 cannot be satisfied. The relevant template was located and updated before running the overcloud deployment. Meanwhile, the "ctlplane" network has switched to 192.168.24.0/24, and br-ctlplane has IP 192.168.24.1 on both Mitaka and Newton. The changes below were made only because of limited hardware, e.g. a 4-core CPU.
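The constraint behind this change can be sketched as a small check: a pool with replica size N needs at least N OSDs to hold every copy. The helper name `check_replicas` is hypothetical; this is only an illustration of the reasoning, not something TripleO or Ceph ships.

```shell
#!/bin/sh
# Hypothetical helper: compare the number of OSDs with the pool replica size.
# Usage: check_replicas <osd_count> <pool_size>
check_replicas() {
    osd_count=$1
    pool_size=$2
    if [ "$osd_count" -lt "$pool_size" ]; then
        # Not every replica can be placed, so the cluster cannot report HEALTH_OK.
        echo "WARN: $osd_count OSDs < pool size $pool_size"
        return 1
    fi
    echo "OK: $osd_count OSDs >= pool size $pool_size"
}
```

With the two Ceph nodes used here, `check_replicas 2 3` warns, while `check_replicas 2 2` passes, which is why the pool size is lowered to 2.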

***************************************

Deployment ansible template ha.yml

***************************************

# Deploy an HA openstack environment.

control_memory: 7000

compute_memory: 6700

undercloud_memory: 8192

# Giving the undercloud additional CPUs can greatly improve heat's

# performance (and result in a shorter deploy time).

undercloud_vcpu: 4

# This enables TLS for the undercloud which will also make haproxy bind to the

# configured public-vip and admin-vip.

undercloud_generate_service_certificate: True

# Create three controller nodes and one compute node.

overcloud_nodes:

- name: control_0

flavor: control

- name: control_1

flavor: control

- name: control_2

flavor: control

- name: compute_0

flavor: compute

- name: ceph_0

flavor: ceph

- name: ceph_1

flavor: ceph

# We intend test inrospection in VENV

step_introspect: true

# Tell tripleo about our environment.

network_isolation: true

extra_args: >-

--control-scale 3

--compute-scale 1

--ceph-storage-scale 2

--neutron-network-type vxlan

--neutron-tunnel-types vxlan

--ntp-server pool.ntp.org

-e {{overcloud_templates_path}}/environments/storage-environment.yaml

test_ping: true

enable_pacemaker: true

run_tempest: false

[john@fedora24wks tripleo-quickstart]$ bash quickstart.sh -R newton --config ./config/general_config/ha.yml $VIRTHOST

Follow http://dbaxps.blogspot.com/2016/10/rdo-newton-overcloud-ha-deployment-via_28.html

until overcloud deployment

********************************************************************************

Due to QuickStart is using prebuilt images just upload them right away

********************************************************************************

[stack@undercloud ~]$ openstack overcloud image upload

WARNING: openstackclient.common.utils is deprecated and will be removed after Jun 2017. Please use osc_lib.utils

Image "overcloud-full-vmlinuz" was uploaded.

[stack@undercloud ~]$ openstack baremetal import instackenv.json

WARNING: openstackclient.common.utils is deprecated and will be removed after Jun 2017. Please use osc_lib.utils

Started Mistral Workflow. Execution ID: 64385e35-5f80-4503-a879-c506cf58e204

Successfully registered node UUID ebcfcf99-6abc-4371-a238-1b1d8314e6ed

Successfully registered node UUID 58a9513b-6f72-4428-975d-efaac8e5a832

Successfully registered node UUID 400d8da7-f8c0-4f5a-8053-e85e8848d49a

Successfully registered node UUID 4af7e1ce-7eff-4f3e-8602-4bbe60d749d4

Successfully registered node UUID 270082e3-69a8-4b09-bcd3-b14de96e8144

Started Mistral Workflow. Execution ID: 4dc751ba-6297-4bbe-87cc-2493a9cb763a

Successfully set all nodes to available.

[stack@undercloud ~]$ openstack baremetal configure boot

WARNING: openstackclient.common.utils is deprecated and will be removed after Jun 2017. Please use osc_lib.utils

[stack@undercloud ~]$ openstack baremetal introspection bulk start

WARNING: openstackclient.common.utils is deprecated and will be removed after Jun 2017. Please use osc_lib.utils

Setting nodes for introspection to manageable...

Starting introspection of manageable nodes

Started Mistral Workflow. Execution ID: 5d9477c4-6f47-4188-afd6-0526c744693e

Waiting for introspection to finish...

Introspection for UUID 4af7e1ce-7eff-4f3e-8602-4bbe60d749d4 finished successfully.

Introspection for UUID 58a9513b-6f72-4428-975d-efaac8e5a832 finished successfully.

Introspection for UUID ebcfcf99-6abc-4371-a238-1b1d8314e6ed finished successfully.

Introspection for UUID 400d8da7-f8c0-4f5a-8053-e85e8848d49a finished successfully.

Introspection for UUID 270082e3-69a8-4b09-bcd3-b14de96e8144 finished successfully.

Introspection completed.

Setting manageable nodes to available...

Started Mistral Workflow. Execution ID: 4bfa1355-923b-4bcc-8ea4-998016716740

********************************************************************************

Here we are forced to set up interface for external network manually

********************************************************************************

[stack@undercloud ~]$ sudo vi /etc/sysconfig/network-scripts/ifcfg-vlan10

DEVICE=vlan10

ONBOOT=yes

DEVICETYPE=ovs

TYPE=OVSIntPort

BOOTPROTO=static

IPADDR=10.0.0.1

NETMASK=255.255.255.0

OVS_BRIDGE=br-ctlplane

OVS_OPTIONS="tag=10"

[stack@undercloud ~]$ sudo ifup vlan10

*****************************************************************************

At this point hack detected template before running overcloud-deploy.sh

First find template with entry "osd_pool_default_size"

*****************************************************************************

# cd /usr/share/openstack-tripleo-heat-templates/

for V in `find . -name "ceph*.yaml" -print`

do

echo $V ;

cat $V | grep "osd_pool_default_size" ;

done

********************************************************************************************

Next $ sudo vi /usr/share/openstack-tripleo-heat-templates/puppet/services/ceph-mon.yaml , update line - ceph::profile::params::osd_pool_default_size: 2

********************************************************************************************

outputs:

role_data:

description: Role data for the Ceph Monitor service.

value:

service_name: ceph_mon

monitoring_subscription: {get_param: MonitoringSubscriptionCephMon}

config_settings:

map_merge:

- get_attr: [CephBase, role_data, config_settings]

- ceph::profile::params::ms_bind_ipv6: {get_param: CephIPv6}

ceph::profile::params::mon_key: {get_param: CephMonKey}

ceph::profile::params::osd_pool_default_pg_num: 32

ceph::profile::params::osd_pool_default_pgp_num: 32

ceph::profile::params::osd_pool_default_size: 2 <== instead of "3"

# repeat returns items in a list, so we need to map_merge twice

tripleo::profile::base::ceph::mon::ceph_pools:

map_merge:

- map_merge:

repeat:

for_each:

<%pool%>:

- {get_param: CinderRbdPoolName}

- {get_param: CinderBackupRbdPoolName}

- {get_param: NovaRbdPoolName}

- {get_param: GlanceRbdPoolName}

- {get_param: GnocchiRbdPoolName}

******************

Create file

******************

[stack@undercloud ~]$ vi $HOME/network_env.yaml

{

"parameter_defaults": {

"ControlPlaneDefaultRoute": "192.168.24.1",

"ControlPlaneSubnetCidr": "24",

"DnsServers": [

"8.8.8.8"

],

"EC2MetadataIp": "192.168.24.1",

"ExternalAllocationPools": [

{

"end": "10.0.0.250",

"start": "10.0.0.4"

}

],

"ExternalNetCidr": "10.0.0.1/24",

"NeutronExternalNetworkBridge": ""

}

}

[stack@undercloud ~]$ sudo iptables -A BOOTSTACK_MASQ -s 10.0.0.0/24 ! -d 10.0.0.0/24 -j MASQUERADE -t nat

[stack@undercloud ~]$ sudo touch -f /usr/share/openstack-tripleo-heat-templates/puppet/post.yaml

**********************************

Script overcloud-deploy.sh

**********************************

Raw text overcloud-deploy.sh

stack$ ./overcloud-deploy.sh

. . . . . . . .

[stack@undercloud ~]$ nova-manage --version

14.0.2

[stack@undercloud ~]$ date

Fri Nov 4 11:01:27 UTC 2016

[stack@undercloud ~]$ nova list

[stack@undercloud ~]$ ssh heat-admin@192.168.24.16

Last login: Fri Nov 4 10:56:55 2016 from 192.168.24.1

[heat-admin@overcloud-controller-0 ~]$ sudo su -

Last login: Fri Nov 4 10:56:56 UTC 2016 on pts/0

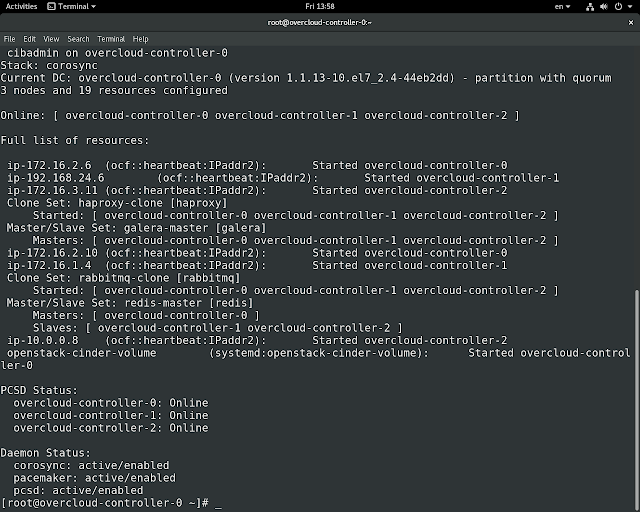

[root@overcloud-controller-0 ~]# pcs status

Cluster name: tripleo_cluster

Last updated: Fri Nov 4 11:02:21 2016 Last change: Fri Nov 4 10:34:24 2016 by root via cibadmin on overcloud-controller-0

Stack: corosync

Current DC: overcloud-controller-0 (version 1.1.13-10.el7_2.4-44eb2dd) - partition with quorum

3 nodes and 19 resources configured

Online: [ overcloud-controller-0 overcloud-controller-1 overcloud-controller-2 ]

Full list of resources:

ip-172.16.2.6 (ocf::heartbeat:IPaddr2): Started overcloud-controller-0

ip-192.168.24.6 (ocf::heartbeat:IPaddr2): Started overcloud-controller-1

ip-172.16.3.11 (ocf::heartbeat:IPaddr2): Started overcloud-controller-2

Clone Set: haproxy-clone [haproxy]

Started: [ overcloud-controller-0 overcloud-controller-1 overcloud-controller-2 ]

Master/Slave Set: galera-master [galera]

Masters: [ overcloud-controller-0 overcloud-controller-1 overcloud-controller-2 ]

ip-172.16.2.10 (ocf::heartbeat:IPaddr2): Started overcloud-controller-0

ip-172.16.1.4 (ocf::heartbeat:IPaddr2): Started overcloud-controller-1

Clone Set: rabbitmq-clone [rabbitmq]

Started: [ overcloud-controller-0 overcloud-controller-1 overcloud-controller-2 ]

Master/Slave Set: redis-master [redis]

Masters: [ overcloud-controller-0 ]

Slaves: [ overcloud-controller-1 overcloud-controller-2 ]

ip-10.0.0.8 (ocf::heartbeat:IPaddr2): Started overcloud-controller-2

openstack-cinder-volume (systemd:openstack-cinder-volume): Started overcloud-controller-0

PCSD Status:

overcloud-controller-0: Online

overcloud-controller-1: Online

overcloud-controller-2: Online

Daemon Status:

corosync: active/enabled

pacemaker: active/enabled

pcsd: active/enabled

[root@overcloud-controller-0 ~]# clustercheck

HTTP/1.1 200 OK

Content-Type: text/plain

Connection: close

Content-Length: 32

Galera cluster node is synced.

[root@overcloud-controller-0 ~]# ceph status

cluster 1e579a4c-a273-11e6-905a-000b33bd457e

health HEALTH_OK

monmap e1: 3 mons at {overcloud-controller-0=172.16.1.11:6789/0,overcloud-controller-1=172.16.1.5:6789/0,overcloud-controller-2=172.16.1.8:6789/0}

election epoch 4, quorum 0,1,2 overcloud-controller-1,overcloud-controller-2,overcloud-controller-0

osdmap e20: 2 osds: 2 up, 2 in

flags sortbitwise

pgmap v135: 224 pgs, 6 pools, 0 bytes data, 0 objects

17063 MB used, 85312 MB / 102375 MB avail

224 active+clean

To eliminate issues lke :-

[root@overcloud-controller-1 ~]# ceph status

cluster a24eec1a-a1bf-11e6-bdb7-00d38065a6b2

health HEALTH_WARN

clock skew detected on mon.overcloud-controller-1, mon.overcloud-controller-0

*******************************************************************************************

DNS resolution should be properly setup on every HA Controller in PCS Cluster, ntpd.service status should be a kind of shown bellow

*******************************************************************************************

on each one of controllers, e.g. no problems to sync with pool.ntp.org

[root@overcloud-controller-0 ~]# ceph osd df tree

[root@overcloud-controller-0 ~]# ceph osd df plain

[root@overcloud-controller-0 ~]# ceph health detail --format=json-pretty

{

"health": {

"health_services": [

{

"mons": [

{

"name": "overcloud-controller-1",

"kb_total": 52416312,

"kb_used": 8275972,

"kb_avail": 44140340,

"avail_percent": 84,

"last_updated": "2016-11-04 11:09:24.781835",

"store_stats": {

"bytes_total": 9438094,

"bytes_sst": 894,

"bytes_log": 9371648,

"bytes_misc": 65552,

"last_updated": "0.000000"

},

"health": "HEALTH_OK"

},

{

"name": "overcloud-controller-2",

"kb_total": 52416312,

"kb_used": 8280564,

"kb_avail": 44135748,

"avail_percent": 84,

"last_updated": "2016-11-04 11:09:23.999622",

"store_stats": {

"bytes_total": 13632398,

"bytes_sst": 894,

"bytes_log": 13565952,

"bytes_misc": 65552,

"last_updated": "0.000000"

},

"health": "HEALTH_OK"

},

{

"name": "overcloud-controller-0",

"kb_total": 52416312,

"kb_used": 8283720,

"kb_avail": 44132592,

"avail_percent": 84,

"last_updated": "2016-11-04 11:09:20.601992",

"store_stats": {

"bytes_total": 13632398,

"bytes_sst": 894,

"bytes_log": 13565952,

"bytes_misc": 65552,

"last_updated": "0.000000"

},

"health": "HEALTH_OK"

}

]

}

]

},

"timechecks": {

"epoch": 4,

"round": 32,

"round_status": "finished",

"mons": [

{

"name": "overcloud-controller-1",

"skew": 0.000000,

"latency": 0.000000,

"health": "HEALTH_OK"

},

{

"name": "overcloud-controller-2",

"skew": -0.003007,

"latency": 0.003155,

"health": "HEALTH_OK"

},

{

"name": "overcloud-controller-0",

"skew": -0.000858,

"latency": 0.003621,

"health": "HEALTH_OK"

}

]

},

"summary": [],

"overall_status": "HEALTH_OK",

"detail": []

}

[root@overcloud-controller-0 ~]# glance image-list

[root@overcloud-controller-0 ~]# cinder list

[root@overcloud-controller-0 ~]# rbd -p volumes ls

volume-9b4af56e-081a-41dc-b411-5e4ba9be6509

volume-eed2f101-53ef-4b92-a79b-2eb496e885b2

[root@overcloud-controller-0 ~]# ceph osd df tree

***************************************

Deployment ansible template ha.yml

***************************************

# Deploy an HA OpenStack environment.
control_memory: 7000
compute_memory: 6700
undercloud_memory: 8192

# Giving the undercloud additional CPUs can greatly improve heat's
# performance (and result in a shorter deploy time).
undercloud_vcpu: 4

# This enables TLS for the undercloud which will also make haproxy bind to the
# configured public-vip and admin-vip.
undercloud_generate_service_certificate: True

# Create three controller nodes, one compute node, and two Ceph storage nodes.
overcloud_nodes:
  - name: control_0
    flavor: control
  - name: control_1
    flavor: control
  - name: control_2
    flavor: control
  - name: compute_0
    flavor: compute
  - name: ceph_0
    flavor: ceph
  - name: ceph_1
    flavor: ceph

# We intend to test introspection in the virtual environment (VENV).
step_introspect: true

# Tell tripleo about our environment.
network_isolation: true
extra_args: >-
  --control-scale 3
  --compute-scale 1
  --ceph-storage-scale 2
  --neutron-network-type vxlan
  --neutron-tunnel-types vxlan
  --ntp-server pool.ntp.org
  -e {{overcloud_templates_path}}/environments/storage-environment.yaml
test_ping: true
enable_pacemaker: true
run_tempest: false

[john@fedora24wks tripleo-quickstart]$ bash quickstart.sh -R newton --config ./config/general_config/ha.yml $VIRTHOST

Follow http://dbaxps.blogspot.com/2016/10/rdo-newton-overcloud-ha-deployment-via_28.html up to the overcloud deployment step.

********************************************************************************

Because QuickStart uses prebuilt images, just upload them right away

********************************************************************************

[stack@undercloud ~]$ openstack overcloud image upload

WARNING: openstackclient.common.utils is deprecated and will be removed after Jun 2017. Please use osc_lib.utils

Image "overcloud-full-vmlinuz" was uploaded.

+--------------------------------------+------------------------+-------------+---------+--------+
| ID                                   | Name                   | Disk Format | Size    | Status |
+--------------------------------------+------------------------+-------------+---------+--------+
| 37c9316c-ac1a-4bac-8bf7-d813781a84b1 | overcloud-full-vmlinuz | aki         | 5158864 | active |
+--------------------------------------+------------------------+-------------+---------+--------+
Image "overcloud-full-initrd" was uploaded.
+--------------------------------------+-----------------------+-------------+----------+--------+
| ID                                   | Name                  | Disk Format | Size     | Status |
+--------------------------------------+-----------------------+-------------+----------+--------+
| f9a38d40-2e27-4954-b032-f27d5ada42be | overcloud-full-initrd | ari         | 41989212 | active |
+--------------------------------------+-----------------------+-------------+----------+--------+
Image "overcloud-full" was uploaded.
+--------------------------------------+----------------+-------------+------------+--------+
| ID                                   | Name           | Disk Format | Size       | Status |
+--------------------------------------+----------------+-------------+------------+--------+
| 6827dbfa-d0dc-4867-ac96-27af9f04779c | overcloud-full | qcow2       | 1295537152 | active |
+--------------------------------------+----------------+-------------+------------+--------+
Image "bm-deploy-kernel" was uploaded.
+--------------------------------------+------------------+-------------+---------+--------+
| ID                                   | Name             | Disk Format | Size    | Status |
+--------------------------------------+------------------+-------------+---------+--------+
| ed26dc05-6c9a-41d2-a023-da1ed3dcb54f | bm-deploy-kernel | aki         | 5158864 | active |
+--------------------------------------+------------------+-------------+---------+--------+
Image "bm-deploy-ramdisk" was uploaded.
+--------------------------------------+-------------------+-------------+-----------+--------+
| ID                                   | Name              | Disk Format | Size      | Status |
+--------------------------------------+-------------------+-------------+-----------+--------+
| cb26e290-96ed-447e-8b4d-2225c0fc2fe5 | bm-deploy-ramdisk | ari         | 407314528 | active |
+--------------------------------------+-------------------+-------------+-----------+--------+

[stack@undercloud ~]$ openstack baremetal import instackenv.json

WARNING: openstackclient.common.utils is deprecated and will be removed after Jun 2017. Please use osc_lib.utils

Started Mistral Workflow. Execution ID: 64385e35-5f80-4503-a879-c506cf58e204

Successfully registered node UUID ebcfcf99-6abc-4371-a238-1b1d8314e6ed

Successfully registered node UUID 58a9513b-6f72-4428-975d-efaac8e5a832

Successfully registered node UUID 400d8da7-f8c0-4f5a-8053-e85e8848d49a

Successfully registered node UUID 4af7e1ce-7eff-4f3e-8602-4bbe60d749d4

Successfully registered node UUID 270082e3-69a8-4b09-bcd3-b14de96e8144

Started Mistral Workflow. Execution ID: 4dc751ba-6297-4bbe-87cc-2493a9cb763a

Successfully set all nodes to available.

[stack@undercloud ~]$ openstack baremetal configure boot

WARNING: openstackclient.common.utils is deprecated and will be removed after Jun 2017. Please use osc_lib.utils

[stack@undercloud ~]$ openstack baremetal introspection bulk start

WARNING: openstackclient.common.utils is deprecated and will be removed after Jun 2017. Please use osc_lib.utils

Setting nodes for introspection to manageable...

Starting introspection of manageable nodes

Started Mistral Workflow. Execution ID: 5d9477c4-6f47-4188-afd6-0526c744693e

Waiting for introspection to finish...

Introspection for UUID 4af7e1ce-7eff-4f3e-8602-4bbe60d749d4 finished successfully.

Introspection for UUID 58a9513b-6f72-4428-975d-efaac8e5a832 finished successfully.

Introspection for UUID ebcfcf99-6abc-4371-a238-1b1d8314e6ed finished successfully.

Introspection for UUID 400d8da7-f8c0-4f5a-8053-e85e8848d49a finished successfully.

Introspection for UUID 270082e3-69a8-4b09-bcd3-b14de96e8144 finished successfully.

Introspection completed.

Setting manageable nodes to available...

Started Mistral Workflow. Execution ID: 4bfa1355-923b-4bcc-8ea4-998016716740

********************************************************************************

Here we have to set up the interface for the external network manually

********************************************************************************

[stack@undercloud ~]$ sudo vi /etc/sysconfig/network-scripts/ifcfg-vlan10

DEVICE=vlan10

ONBOOT=yes

DEVICETYPE=ovs

TYPE=OVSIntPort

BOOTPROTO=static

IPADDR=10.0.0.1

NETMASK=255.255.255.0

OVS_BRIDGE=br-ctlplane

OVS_OPTIONS="tag=10"

[stack@undercloud ~]$ sudo ifup vlan10
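As an alternative to typing the file in an editor, the same ifcfg content can be generated from parameters. This is a minimal sketch; the function name `render_ifcfg_vlan` is hypothetical, and the keys simply mirror the file shown above.

```shell
#!/bin/sh
# Hypothetical helper: print an ifcfg file for an OVS internal port on a VLAN.
# Usage: render_ifcfg_vlan <vlan_id> <ip_address> <netmask> <ovs_bridge>
render_ifcfg_vlan() {
    # The here-document expands $1..$4; the double quotes are emitted literally.
    cat <<EOF
DEVICE=vlan$1
ONBOOT=yes
DEVICETYPE=ovs
TYPE=OVSIntPort
BOOTPROTO=static
IPADDR=$2
NETMASK=$3
OVS_BRIDGE=$4
OVS_OPTIONS="tag=$1"
EOF
}
```

For the values used in this post, the output of `render_ifcfg_vlan 10 10.0.0.1 255.255.255.0 br-ctlplane` could be piped into `sudo tee /etc/sysconfig/network-scripts/ifcfg-vlan10` before running `sudo ifup vlan10`.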

*****************************************************************************

At this point, patch the relevant template before running overcloud-deploy.sh

First, find the template containing the entry "osd_pool_default_size"

*****************************************************************************

# cd /usr/share/openstack-tripleo-heat-templates/
for V in $(find . -name "ceph*.yaml" -print)
do
    echo "$V"
    grep "osd_pool_default_size" "$V"
done
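The same search can be sketched as a single `find` and `grep` call that prints only the matching files, with line numbers. The function name `find_template_key` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: list ceph*.yaml files under a directory that mention a key.
# Output format is file:line:matching text.
# Usage: find_template_key <template_dir> <key>
find_template_key() {
    find "$1" -name 'ceph*.yaml' -type f -exec grep -Hn -- "$2" {} +
}
```

Calling `find_template_key /usr/share/openstack-tripleo-heat-templates osd_pool_default_size` should point at the file that needs editing, without echoing every non-matching template.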

********************************************************************************************

Next, run $ sudo vi /usr/share/openstack-tripleo-heat-templates/puppet/services/ceph-mon.yaml and update the line to read ceph::profile::params::osd_pool_default_size: 2

********************************************************************************************

outputs:
  role_data:
    description: Role data for the Ceph Monitor service.
    value:
      service_name: ceph_mon
      monitoring_subscription: {get_param: MonitoringSubscriptionCephMon}
      config_settings:
        map_merge:
          - get_attr: [CephBase, role_data, config_settings]
          - ceph::profile::params::ms_bind_ipv6: {get_param: CephIPv6}
            ceph::profile::params::mon_key: {get_param: CephMonKey}
            ceph::profile::params::osd_pool_default_pg_num: 32
            ceph::profile::params::osd_pool_default_pgp_num: 32
            ceph::profile::params::osd_pool_default_size: 2   # changed from "3"
            # repeat returns items in a list, so we need to map_merge twice
            tripleo::profile::base::ceph::mon::ceph_pools:
              map_merge:
                - map_merge:
                    repeat:
                      for_each:
                        <%pool%>:
                          - {get_param: CinderRbdPoolName}
                          - {get_param: CinderBackupRbdPoolName}
                          - {get_param: NovaRbdPoolName}
                          - {get_param: GlanceRbdPoolName}
                          - {get_param: GnocchiRbdPoolName}
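The manual edit above can also be scripted. The following is a rough sketch, assuming the key appears on one line followed by an integer; the function name `set_pool_size` and the `.orig` backup suffix are choices made for this example.

```shell
#!/bin/sh
# Hypothetical helper: set osd_pool_default_size in a template file,
# keeping the previous version as <file>.orig.
# Usage: set_pool_size <template_file> <size>
set_pool_size() {
    cp -p "$1" "$1.orig" &&
    # Rewrite only the number after the key; indentation and other lines stay untouched.
    sed "s/\(osd_pool_default_size:\)[[:space:]]*[0-9][0-9]*/\1 $2/" "$1.orig" > "$1"
}
```

Run as root against ceph-mon.yaml with a size of 2, this should leave the file equivalent to the hand-edited version, and the `.orig` copy makes it easy to revert.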

******************

Create file

******************

[stack@undercloud ~]$ vi $HOME/network_env.yaml

{
    "parameter_defaults": {
        "ControlPlaneDefaultRoute": "192.168.24.1",
        "ControlPlaneSubnetCidr": "24",
        "DnsServers": [
            "8.8.8.8"
        ],
        "EC2MetadataIp": "192.168.24.1",
        "ExternalAllocationPools": [
            {
                "end": "10.0.0.250",
                "start": "10.0.0.4"
            }
        ],
        "ExternalNetCidr": "10.0.0.1/24",
        "NeutronExternalNetworkBridge": ""
    }
}

[stack@undercloud ~]$ sudo iptables -A BOOTSTACK_MASQ -s 10.0.0.0/24 ! -d 10.0.0.0/24 -j MASQUERADE -t nat

[stack@undercloud ~]$ sudo touch -f /usr/share/openstack-tripleo-heat-templates/puppet/post.yaml
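Because `iptables -A` appends a new copy of the rule every time it is run, a re-run of these steps would duplicate the MASQUERADE entry. A possible guard, sketched below, checks for the rule with `iptables -C` first. The function name `ensure_masquerade` and the overridable `IPTABLES` variable are assumptions made for this example.

```shell
#!/bin/sh
# IPTABLES can be overridden (for example with a stub) for a dry run.
IPTABLES=${IPTABLES:-iptables}

# Hypothetical helper: append a MASQUERADE rule to a nat chain only if it is missing.
# Usage: ensure_masquerade <chain> <cidr>
ensure_masquerade() {
    # -C exits 0 when an identical rule already exists in the chain.
    if "$IPTABLES" -t nat -C "$1" -s "$2" ! -d "$2" -j MASQUERADE 2>/dev/null; then
        echo "rule already present in $1"
    else
        "$IPTABLES" -t nat -A "$1" -s "$2" ! -d "$2" -j MASQUERADE &&
        echo "rule added to $1"
    fi
}
```

Run as root, `ensure_masquerade BOOTSTACK_MASQ 10.0.0.0/24` would correspond to the command used above.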

**********************************

Script overcloud-deploy.sh

**********************************

#!/bin/bash -x
source /home/stack/stackrc
openstack overcloud deploy \
  --control-scale 3 --compute-scale 1 --ceph-storage-scale 2 \
  --libvirt-type qemu \
  --ntp-server pool.ntp.org \
  --templates /usr/share/openstack-tripleo-heat-templates \
  -e /usr/share/openstack-tripleo-heat-templates/environments/puppet-pacemaker.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/storage-environment.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/network-isolation.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/net-single-nic-with-vlans.yaml \
  -e $HOME/network_env.yaml
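A deployment that fails late because one `-e` file is missing costs a lot of time on small hardware, so a quick pre-flight check may be worthwhile. The sketch below only tests that each file is readable; the function name `check_env_files` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: report every environment file that is missing or unreadable.
# Returns non-zero if at least one file cannot be read.
# Usage: check_env_files <file>...
check_env_files() {
    missing=0
    for f in "$@"; do
        if [ ! -r "$f" ]; then
            echo "missing: $f"
            missing=1
        fi
    done
    return "$missing"
}
```

It could be called with the five `-e` paths from overcloud-deploy.sh before the `openstack overcloud deploy` command is started.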

stack$ ./overcloud-deploy.sh

. . . . . . . .

[stack@undercloud ~]$ nova-manage --version

14.0.2

[stack@undercloud ~]$ date

Fri Nov 4 11:01:27 UTC 2016

[stack@undercloud ~]$ nova list

+--------------------------------------+-------------------------+--------+------------+-------------+------------------------+
| ID                                   | Name                    | Status | Task State | Power State | Networks               |
+--------------------------------------+-------------------------+--------+------------+-------------+------------------------+
| 0693565d-0b43-4e17-b26c-8cca59871cbd | overcloud-cephstorage-0 | ACTIVE | -          | Running     | ctlplane=192.168.24.13 |
| a7930ce9-80ca-4ebb-85f3-86acb7311961 | overcloud-cephstorage-1 | ACTIVE | -          | Running     | ctlplane=192.168.24.14 |
| cfe855a3-34cf-4a9e-ba55-5da1f4c0772f | overcloud-controller-0  | ACTIVE | -          | Running     | ctlplane=192.168.24.16 |
| 066142e0-557d-4f69-8054-d0221d8e99e7 | overcloud-controller-1  | ACTIVE | -          | Running     | ctlplane=192.168.24.15 |
| 7c97d1f3-0ffd-4939-b12e-8b50f2f3ad81 | overcloud-controller-2  | ACTIVE | -          | Running     | ctlplane=192.168.24.7  |
| 49df48cf-faae-41ac-9bd2-cbf59d33d917 | overcloud-novacompute-0 | ACTIVE | -          | Running     | ctlplane=192.168.24.11 |
+--------------------------------------+-------------------------+--------+------------+-------------+------------------------+

[stack@undercloud ~]$ ssh heat-admin@192.168.24.16

Last login: Fri Nov 4 10:56:55 2016 from 192.168.24.1

[heat-admin@overcloud-controller-0 ~]$ sudo su -

Last login: Fri Nov 4 10:56:56 UTC 2016 on pts/0

[root@overcloud-controller-0 ~]# pcs status

Cluster name: tripleo_cluster

Last updated: Fri Nov 4 11:02:21 2016 Last change: Fri Nov 4 10:34:24 2016 by root via cibadmin on overcloud-controller-0

Stack: corosync

Current DC: overcloud-controller-0 (version 1.1.13-10.el7_2.4-44eb2dd) - partition with quorum

3 nodes and 19 resources configured

Online: [ overcloud-controller-0 overcloud-controller-1 overcloud-controller-2 ]

Full list of resources:

ip-172.16.2.6 (ocf::heartbeat:IPaddr2): Started overcloud-controller-0

ip-192.168.24.6 (ocf::heartbeat:IPaddr2): Started overcloud-controller-1

ip-172.16.3.11 (ocf::heartbeat:IPaddr2): Started overcloud-controller-2

Clone Set: haproxy-clone [haproxy]

Started: [ overcloud-controller-0 overcloud-controller-1 overcloud-controller-2 ]

Master/Slave Set: galera-master [galera]

Masters: [ overcloud-controller-0 overcloud-controller-1 overcloud-controller-2 ]

ip-172.16.2.10 (ocf::heartbeat:IPaddr2): Started overcloud-controller-0

ip-172.16.1.4 (ocf::heartbeat:IPaddr2): Started overcloud-controller-1

Clone Set: rabbitmq-clone [rabbitmq]

Started: [ overcloud-controller-0 overcloud-controller-1 overcloud-controller-2 ]

Master/Slave Set: redis-master [redis]

Masters: [ overcloud-controller-0 ]

Slaves: [ overcloud-controller-1 overcloud-controller-2 ]

ip-10.0.0.8 (ocf::heartbeat:IPaddr2): Started overcloud-controller-2

openstack-cinder-volume (systemd:openstack-cinder-volume): Started overcloud-controller-0

PCSD Status:

overcloud-controller-0: Online

overcloud-controller-1: Online

overcloud-controller-2: Online

Daemon Status:

corosync: active/enabled

pacemaker: active/enabled

pcsd: active/enabled

[root@overcloud-controller-0 ~]# clustercheck

HTTP/1.1 200 OK

Content-Type: text/plain

Connection: close

Content-Length: 32

Galera cluster node is synced.

[root@overcloud-controller-0 ~]# ceph status

cluster 1e579a4c-a273-11e6-905a-000b33bd457e

health HEALTH_OK

monmap e1: 3 mons at {overcloud-controller-0=172.16.1.11:6789/0,overcloud-controller-1=172.16.1.5:6789/0,overcloud-controller-2=172.16.1.8:6789/0}

election epoch 4, quorum 0,1,2 overcloud-controller-1,overcloud-controller-2,overcloud-controller-0

osdmap e20: 2 osds: 2 up, 2 in

flags sortbitwise

pgmap v135: 224 pgs, 6 pools, 0 bytes data, 0 objects

17063 MB used, 85312 MB / 102375 MB avail

224 active+clean

To eliminate issues like the following:

[root@overcloud-controller-1 ~]# ceph status

cluster a24eec1a-a1bf-11e6-bdb7-00d38065a6b2

health HEALTH_WARN

clock skew detected on mon.overcloud-controller-1, mon.overcloud-controller-0

*******************************************************************************************

DNS resolution should be set up properly on every HA controller in the PCS cluster, and ntpd.service status should look like the output shown below

*******************************************************************************************

[root@overcloud-controller-0 ~]# systemctl status ntpd.service
● ntpd.service - Network Time Service
   Loaded: loaded (/usr/lib/systemd/system/ntpd.service; enabled; vendor preset: disabled)
   Active: active (running) since Fri 2016-11-04 10:54:33 UTC; 5h 41min ago
  Process: 9693 ExecStart=/usr/sbin/ntpd -u ntp:ntp $OPTIONS (code=exited, status=0/SUCCESS)
 Main PID: 9694 (ntpd)
   CGroup: /system.slice/ntpd.service
           └─9694 /usr/sbin/ntpd -u ntp:ntp -g

Nov 04 10:54:33 overcloud-controller-0 ntpd[9694]: Listen normally on 16 vlan10 fe80::2807:8...23
Nov 04 10:54:33 overcloud-controller-0 ntpd[9694]: Listen normally on 17 vlan40 fe80::543e:1...23
Nov 04 10:54:33 overcloud-controller-0 ntpd[9694]: Listen normally on 18 vlan20 fe80::802c:b...23
Nov 04 10:54:33 overcloud-controller-0 ntpd[9694]: Listening on routing socket on fd #35 for...es
Nov 04 10:54:33 overcloud-controller-0 ntpd[9694]: 0.0.0.0 c016 06 restart
Nov 04 10:54:33 overcloud-controller-0 ntpd[9694]: 0.0.0.0 c012 02 freq_set kernel 0.000 PPM
Nov 04 10:54:33 overcloud-controller-0 ntpd[9694]: 0.0.0.0 c011 01 freq_not_set
Nov 04 10:54:34 overcloud-controller-0 ntpd[9694]: 0.0.0.0 c614 04 freq_mode
Nov 04 11:11:01 overcloud-controller-0 ntpd[9694]: 0.0.0.0 0612 02 freq_set kernel -6.429 PPM
Nov 04 11:11:01 overcloud-controller-0 ntpd[9694]: 0.0.0.0 0615 05 clock_sync
Hint: Some lines were ellipsized, use -l to show in full.

on each of the controllers, i.e. there should be no problems syncing with pool.ntp.org

[root@overcloud-controller-0 ~]# ceph osd df tree

ID WEIGHT  REWEIGHT SIZE    USE    AVAIL  %USE  VAR  PGS TYPE NAME
-1 0.09760        - 102375M 17063M 85312M 16.67 1.00   0 root default
-2 0.04880        -  51187M  8532M 42655M 16.67 1.00   0     host overcloud-cephstorage-1
 0 0.04880  1.00000  51187M  8532M 42655M 16.67 1.00 224         osd.0
-3 0.04880        -  51187M  8531M 42656M 16.67 1.00   0     host overcloud-cephstorage-0
 1 0.04880  1.00000  51187M  8531M 42656M 16.67 1.00 224         osd.1
              TOTAL 102375M 17063M 85312M 16.67
MIN/MAX VAR: 1.00/1.00  STDDEV: 0

[root@overcloud-controller-0 ~]# ceph osd df plain

ID WEIGHT  REWEIGHT SIZE    USE    AVAIL  %USE  VAR  PGS
 0 0.04880  1.00000  51187M  8532M 42655M 16.67 1.00 224
 1 0.04880  1.00000  51187M  8531M 42656M 16.67 1.00 224
              TOTAL 102375M 17063M 85312M 16.67
MIN/MAX VAR: 1.00/1.00  STDDEV: 0

[root@overcloud-controller-0 ~]# ceph health detail --format=json-pretty

{
    "health": {
        "health_services": [
            {
                "mons": [
                    {
                        "name": "overcloud-controller-1",
                        "kb_total": 52416312,
                        "kb_used": 8275972,
                        "kb_avail": 44140340,
                        "avail_percent": 84,
                        "last_updated": "2016-11-04 11:09:24.781835",
                        "store_stats": {
                            "bytes_total": 9438094,
                            "bytes_sst": 894,
                            "bytes_log": 9371648,
                            "bytes_misc": 65552,
                            "last_updated": "0.000000"
                        },
                        "health": "HEALTH_OK"
                    },
                    {
                        "name": "overcloud-controller-2",
                        "kb_total": 52416312,
                        "kb_used": 8280564,
                        "kb_avail": 44135748,
                        "avail_percent": 84,
                        "last_updated": "2016-11-04 11:09:23.999622",
                        "store_stats": {
                            "bytes_total": 13632398,
                            "bytes_sst": 894,
                            "bytes_log": 13565952,
                            "bytes_misc": 65552,
                            "last_updated": "0.000000"
                        },
                        "health": "HEALTH_OK"
                    },
                    {
                        "name": "overcloud-controller-0",
                        "kb_total": 52416312,
                        "kb_used": 8283720,
                        "kb_avail": 44132592,
                        "avail_percent": 84,
                        "last_updated": "2016-11-04 11:09:20.601992",
                        "store_stats": {
                            "bytes_total": 13632398,
                            "bytes_sst": 894,
                            "bytes_log": 13565952,
                            "bytes_misc": 65552,
                            "last_updated": "0.000000"
                        },
                        "health": "HEALTH_OK"
                    }
                ]
            }
        ]
    },
    "timechecks": {
        "epoch": 4,
        "round": 32,
        "round_status": "finished",
        "mons": [
            {
                "name": "overcloud-controller-1",
                "skew": 0.000000,
                "latency": 0.000000,
                "health": "HEALTH_OK"
            },
            {
                "name": "overcloud-controller-2",
                "skew": -0.003007,
                "latency": 0.003155,
                "health": "HEALTH_OK"
            },
            {
                "name": "overcloud-controller-0",
                "skew": -0.000858,
                "latency": 0.003621,
                "health": "HEALTH_OK"
            }
        ]
    },
    "summary": [],
    "overall_status": "HEALTH_OK",
    "detail": []
}
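The "timechecks" section above is what matters for the clock skew warning. As a rough sketch, the skew values can be pulled out of the json-pretty output and compared with a limit. The function name `report_skew` is hypothetical, the 0.05 second default is an assumption that should match the cluster's `mon_clock_drift_allowed` setting, and the parsing relies on the one-key-per-line layout shown above rather than on a real JSON parser.

```shell
#!/bin/sh
# Hypothetical helper: read `ceph health detail --format=json-pretty` on stdin and
# print every monitor whose absolute clock skew exceeds a limit (in seconds).
# Usage: ceph health detail --format=json-pretty | report_skew [limit]
report_skew() {
    awk -v limit="${1:-0.05}" '
        # Remember the most recent monitor name.
        /"name":/ { gsub(/[",]/, "", $2); name = $2 }
        # Compare the absolute skew with the limit.
        /"skew":/ {
            gsub(/,/, "", $2)
            skew = $2 + 0
            if (skew < 0) skew = -skew
            if (skew > limit + 0) print name, skew
        }
    '
}
```

For the output shown in this post the function should print nothing, since the largest skew is about 0.003 seconds.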

[root@overcloud-controller-0 ~]# glance image-list

+--------------------------------------+---------------+
| ID                                   | Name          |
+--------------------------------------+---------------+
| c8ea9e70-b5d5-48e8-b571-b7fa406be066 | CentOS72Cloud |
| aedec5fb-fe33-4fee-ba06-6e64d2e0020b | VF24Cloud     |
+--------------------------------------+---------------+

[root@overcloud-controller-0 ~]# rbd -p images ls

aedec5fb-fe33-4fee-ba06-6e64d2e0020b

c8ea9e70-b5d5-48e8-b571-b7fa406be066

[root@overcloud-controller-0 ~]# cinder list

+--------------------------------------+--------+----------------+------+-------------+----------+--------------------------------------+
| ID                                   | Status | Name           | Size | Volume Type | Bootable | Attached to                          |
+--------------------------------------+--------+----------------+------+-------------+----------+--------------------------------------+
| 9b4af56e-081a-41dc-b411-5e4ba9be6509 | in-use | vf24volume     | 7    | -           | true     | c163494d-3ef6-4499-acc6-0116c96d0def |
| eed2f101-53ef-4b92-a79b-2eb496e885b2 | in-use | centos72volume | 10   | -           | true     | 14296835-a473-41ae-a636-11d312a0a51c |
+--------------------------------------+--------+----------------+------+-------------+----------+--------------------------------------+

[root@overcloud-controller-0 ~]# rbd -p volumes ls

volume-9b4af56e-081a-41dc-b411-5e4ba9be6509

volume-eed2f101-53ef-4b92-a79b-2eb496e885b2

[root@overcloud-controller-0 ~]# ceph osd df tree

ID WEIGHT  REWEIGHT SIZE    USE    AVAIL  %USE  VAR  PGS TYPE NAME
-1 0.09760        - 102375M 24031M 78344M 23.47 1.00   0 root default
-2 0.04880        -  51187M 12015M 39172M 23.47 1.00   0     host overcloud-cephstorage-1
 0 0.04880  1.00000  51187M 12015M 39172M 23.47 1.00 224         osd.0
-3 0.04880        -  51187M 12015M 39172M 23.47 1.00   0     host overcloud-cephstorage-0
 1 0.04880  1.00000  51187M 12015M 39172M 23.47 1.00 224         osd.1
              TOTAL 102375M 24031M 78344M 23.47
MIN/MAX VAR: 1.00/1.00  STDDEV: 0
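The %USE column is simply USE divided by SIZE. A small sketch of that arithmetic, using a hypothetical `pct_used` helper, makes it easy to compare the two `ceph osd df tree` outputs in this post.

```shell
#!/bin/sh
# Hypothetical helper: percentage of raw capacity in use, rounded to two decimals.
# Usage: pct_used <used_mb> <size_mb>
pct_used() {
    awk -v used="$1" -v size="$2" 'BEGIN { printf "%.2f\n", used * 100 / size }'
}
```

`pct_used 17063 102375` gives 16.67 and `pct_used 24031 102375` gives 23.47, matching the TOTAL rows of the earlier and later listings.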

[root@overcloud-controller-0 ~]# ceph mon_status

{"name":"overcloud-controller-1","rank":0,"state":"leader","election_epoch":4,"quorum":[0,1,2],
"outside_quorum":[],"extra_probe_peers":["172.16.1.8:6789\/0","172.16.1.11:6789\/0"],
"sync_provider":[],"monmap":{"epoch":1,"fsid":"1e579a4c-a273-11e6-905a-000b33bd457e",
"modified":"2016-11-04 10:18:23.686836","created":"2016-11-04 10:18:23.686836",
"mons":[{"rank":0,"name":"overcloud-controller-1","addr":"172.16.1.5:6789\/0"},
{"rank":1,"name":"overcloud-controller-2","addr":"172.16.1.8:6789\/0"},
{"rank":2,"name":"overcloud-controller-0","addr":"172.16.1.11:6789\/0"}]}}

[root@overcloud-controller-0 ~]# ceph quorum_status

{"election_epoch":4,"quorum":[0,1,2],
"quorum_names":["overcloud-controller-1","overcloud-controller-2","overcloud-controller-0"],
"quorum_leader_name":"overcloud-controller-1",
"monmap":{"epoch":1,"fsid":"1e579a4c-a273-11e6-905a-000b33bd457e",
"modified":"2016-11-04 10:18:23.686836","created":"2016-11-04 10:18:23.686836",
"mons":[{"rank":0,"name":"overcloud-controller-1","addr":"172.16.1.5:6789\/0"},
{"rank":1,"name":"overcloud-controller-2","addr":"172.16.1.8:6789\/0"},
{"rank":2,"name":"overcloud-controller-0","addr":"172.16.1.11:6789\/0"}]}}

Htop reporting
